“When it’s that widespread, it’s a culture. It’s not just an individual student. It is so many. And when I talk to some undergrads, they’re like, ‘Everybody does it.’” Dr. Amy Clukey

Introduction

As Artificial Intelligence (AI) continues to spread like a parasite through our evolving society, it, of course, has found a perfect host in the student. Not only has it infected every aspect of the student’s academic integrity, but also the personhood and the liveliness of the student, rendering them into a soulless and empty shell of a human being. The individual is arguably no longer a student but a pathetic machine whose sole purpose is to regurgitate the works of someone, or something, else and pass it off as their own, without remorse or concern, and with a sense of entitlement that “I did this.” It’s terrible, it’s depraved, and it’s pervasive. How can we cope? How can we rebound as a society? Is there a way? Is it without hope?

As it stands, I am no fan of Artificial Intelligence in the classroom. I am not only a critic but an active hater, someone who seethes at the mere discussion of using ChatGPT for “ideas” and refuses to accept any reasoning from another party. This is where I’m deeply flawed. My cynical revulsion toward Artificial Intelligence in the classroom is the unpopular opinion that people poke fun at, believe is outdated, and think makes me unlikeable. I do not take this stance to be different or to have a superiority soapbox moment. I do not care if this opinion isolates me or makes me undesirable to others. I am okay with that. I know I’m not doing myself any favors, that I should give AI a chance or simply be open to coming around on it. But I refuse. I am aware of my hypocrisy. Even so, I have no interest in changing how I feel, which is uncomfortable and, for lack of a better term, lame. Am I unfit for the classroom? Is my pessimism going to bulldoze my hopes of being a good educator? Will I be shunned for being this way, by colleagues and students alike?

Artifact Review

What makes a professor? Is there room for educators in the classroom moving forward? Addressing the prominence of Artificial Intelligence in higher education is paramount to maintaining accredited institutions and the sanctity of academia itself. To investigate first-hand experience for this admittedly avant-garde research paper, I will examine a series of artifacts. These will include pieces from Dr. Amy Clukey, a Professor of English at the University of Arkansas, who has archived her positions on Artificial Intelligence in the classroom frequently on X, formerly known as Twitter.

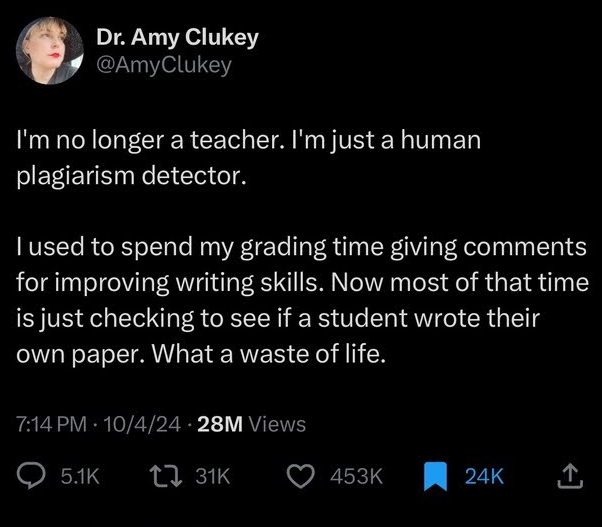

I reached out to Dr. Amy Clukey directly via email for more of her input on the topic. She kindly responded with a recent article from The Chronicle of Higher Education that features her and contains additional content. I posed a series of questions and requested a direct, exclusive quote from her but did not hear back. In her X posts, Clukey states, “I’m no longer a teacher. I’m just a human plagiarism detector.” This signals that the role of the professor is changing in academia; the job description has altered to include some sort of AI defense or, conversely, ignorance.

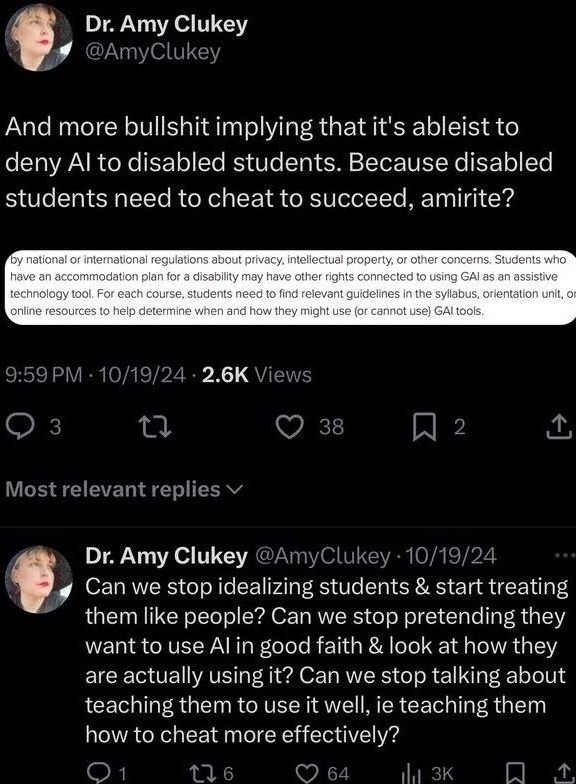

Clukey added to her plethora of X posts regarding AI by addressing the conversations surrounding Artificial Intelligence and accessibility for disabled students. One may think, “Artificial Intelligence is probably a great tool for disabled people.” Clukey, a disabled person herself, shuts that down. She claims that encouraging or turning a blind eye to disabled students using AI does them no favors and basically tells the world that, because they are disabled, they should be allowed to “cheat” because they “need to.” To her point, this is a dangerous narrative to present to the world. If we, as a collective, decide that Artificial Intelligence is a tool, then we effectively open up a Pandora’s box of problems for people who will claim they need to utilize it. What are we defining as a “tool” anyway?

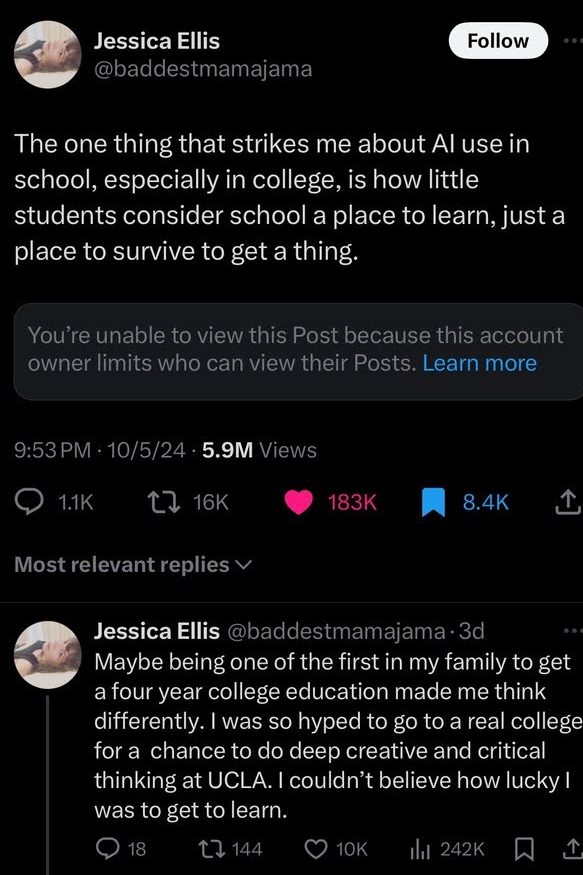

Jessica Ellis’s explanation of Artificial Intelligence in college is shocking. She claims that students are now only viewing college as the “place where you get the thing” instead of learning institutions. She further argues that her experience as a first-generation college student likely contributes to the value and gratefulness she held towards going to college, viewing it as the educational opportunity of a lifetime instead of a box to check off.

Gina Ellis hits a different angle when discussing AI. Ellis says she’s worried that the increased usage of Artificial Intelligence by others will begin to affect everyone else. She claims that other people’s usage diminishes everyone’s ability to discern what is real from what is not. Ellis is moving into the conversations surrounding AI literacy, a new topic frequently discussed in academia. Liz Dente replies with her thoughts, stating she always thought she was good at telling fact from fiction. Now, she feels unsure and is certain others do, too. I wonder how long we will discuss the challenges of separating fake AI-generated media from real media before it gets too hard, the general population loses interest in changing, and we begin to accept AI as our new reality.

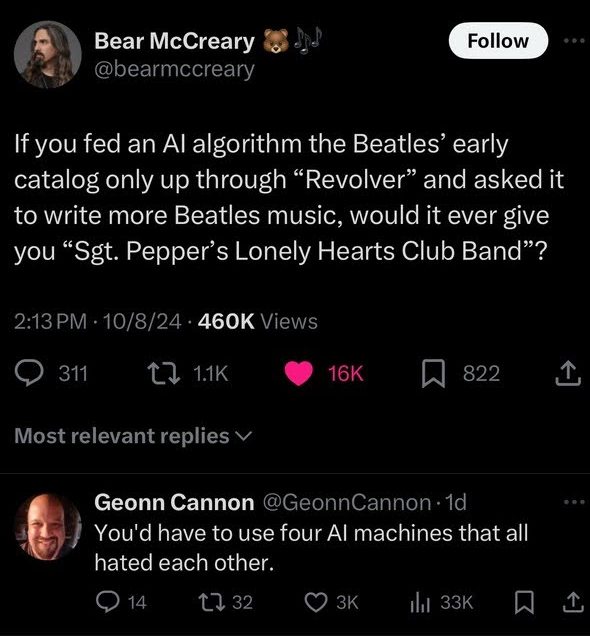

Bear McCreary posts a joke to X. But he makes an excellent point for discussion. Artificial Intelligence cannot replicate the Beatles nor pre-emptively create music that had not yet been recorded. Therefore, does that mean Artificial Intelligence should be a trusted source of content generation? Geonn Cannon replies with another joke. He suggests the only way to replicate the human creative process is to mimic the Beatles’ group dynamic of disdain. As of December 2024, AI has not yet become sentient. Let’s hope it remains this way.

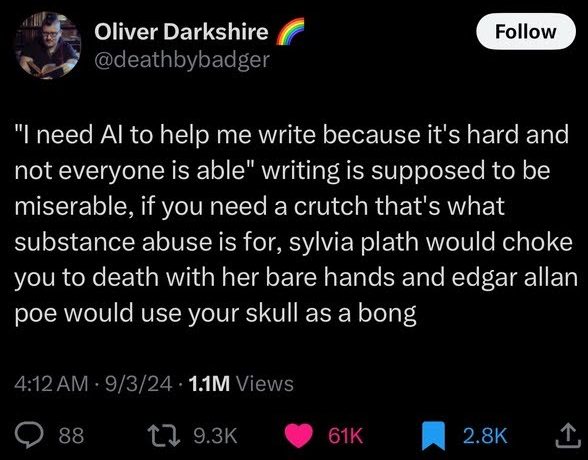

Oliver Darkshire posts another joke to X. He theorizes that if you think writing things yourself is “hard,” you should think again. Darkshire claims that if Sylvia Plath or Edgar Allan Poe were alive and heard this, they would take measures to ensure you understood how ridiculous that statement is. His joke does bring up a good point about integrity and authenticity. If we allow writers now to use AI and be, effectively, less authentic, what does that mean for legacy? Does that make Plath or Poe all the more remarkable? Or will we revere writers who use AI in the same way, offending both Plath and Poe?

This poster takes a Fahrenheit 451 approach to Artificial Intelligence. They claim that anyone who wants to “save time” by using AI should remember that the humanities are what make us free. People often misinterpret Fahrenheit 451 as a cautionary tale of government-imposed censorship. However, the book is truly about a people who grew bored of philosophy and, in reaction, gave up.

Marie Pruitt highlights the political and economic aspects of Artificial Intelligence in the college classroom. She asks why anyone would complain about the defunding of the humanities and then allow AI to exist in the classroom. Her analysis recognizes that AI is not going to improve the value of the humanities and suggests it will dilute that value instead.

Club Chalamet is a notable figure online who frequently posts creative and evocative captions. Her response to the mere suggestion that she uses AI is poignant. She says it’s cheating and a disgrace to the hard work and money she dedicated to getting an MA in Communications. She ends with the statement “The shit I write is the shit I write.”

Conclusion

It is important to remember that these X posts were hand-chosen and all lean towards an anti-AI stance; many, many posts on X have a positive outlook on Artificial Intelligence. However, these posts are not to be discounted. All of the posts examined, especially together, showcase an anxiety or uncertainty that exists in many people surrounding Artificial Intelligence, in and outside of the classroom. These anxieties demonstrate the direct impacts of AI being seen in students and the general public. With these in mind, my recommendations for dealing with AI in the classroom are as follows.

- To prevent cheating, assign work on paper. Yes, that is a lot of paper. But having to turn in something that was hand-written on paper makes it more difficult to utilize AI to cheat.

- To encourage critical thinking, demonstrate how AI can be incorrect. Show students examples of when AI has made mistakes and how likely it is to do it again. Then, talk about what the implications of misinformation via AI could be on the general public, especially young people.

- To preserve reading and writing skills, assign books to be read. If it makes sense in your classroom, assigning a nonfiction or fiction reading for the semester could encourage students to read. If you base assignments and discussions around specific instances in the book and ask for analysis in class, it’ll be more difficult for students to cheat with AI.

- Last, but not least, no phones and no computers. People will say this hinders accessibility. I say allowing students to become reliant on them hinders students’ ability to do things themselves. If students are not allowed their devices in class, they will be forced not only to participate but also to slowly re-train their attention spans to extend more than a few seconds. Of course, there may be a unique instance where a student requires a device; I am not talking about those instances.

Admittedly, I have not yet been a teacher in my own classroom. Additionally, I do not have the experience that many educators have with students or with attempting some of these recommendations. Therefore, my evaluation of these concerns and recommendations is based solely on the figures above and minimal lived experience. In the end, whether we should abolish or encourage AI in the classroom, we all need to make a concerted effort to challenge anything that could potentially stand in the way of a student’s ability to learn everything they can.

Fuck ChatGPT.

References

Figure 1. [@AmyClukey]. (2024, October 4). I’m no longer a teacher. I’m just a human plagiarism detector. I used to spend my grading time giving comments for improving writing skills. Now most of that time is just checking to see if a student wrote their own paper. What a waste of life. [Post]. X.

Figure 2. [@AmyClukey]. (2024, October 19). And more bullshit implying that its abelist to deny AI to disables students. Because disabled students need to cheat to succeed, amirite?. Can we stop idealizing students & start treating them like people? Can we stop pretending they want to use AI in good faith & look at how they are actually using it? Can we stop talking about teaching them to use it well, ie teaching them how to cheat more effectively? [Post]. X.

Figure 3. [@Baddestmamajama]. (2024, October 5). The one thing that strikes me about AI use in school, especially in college, is how little students consider school a place to learn, just a place to survive to get a thing. Maybe being one of the first in my family to get a four year college education made me think differently. I was so hyped to go to a real college for a chance to do deep creative and critical thinking at UCLA. I couldn’t believe how lucky I was to get to learn. [Post]. X.

Figure 4. [@GinaEllis4]. (2024, October 8). I am afraid that, added to climate catastrophe, unchecked virus spread, political nonsense and wars, we face and onslaught of AI which will sweep sway all ability to discern, however dimly, our reality. [Post]. X.

[@Liz_dente]. (2024, October 8). I am deeply worried about the same. I’ve always been pretty good about being able to spot the lies and fakes (big reader, art and comm background) but lately I’ve been less certain/without ability to check against any real sources. If I’M struggling, I know other are for sure. [Post]. X.

Figure 5. [@Bearmccreary]. (2024, October 8). If you read an AI algorithm the Beatles’ early catalog only up through “Revolver” and asked it to write more Beatles music, would it ever give you “Sgt. Pepper’s Lonely Hearts Club Band”?. [Post]. X.

[@GeonnCannon]. (2024, October 8). You’d have to use for AI machines that all hated each other. [Post]. X.

Figure 6. [@Deathbybadger]. (2024, September 3). “I need AI to help me write because it’s hard and not everyone is able” writing is supposed to be miserable, if you need a crutch that’s what substance abuse is for, sylvia plath would choke you to death with her bare hands and edgar allan poe would use your skull as a bong. [Post]. X.

Figure 7. [@SketchesbyBoze]. (2024, October 17). These people are giving the game away. They want to create a world in which no one has the desire to read, create or think, and eventually no one has the capacity to. Reading, critical thinking, the humanities, are never wastes of time; they are what makes us free. [Post]. X.

Figure 8. [@MariePruitt_CR]. (2024, November 2). You can’t lament about the defuding of humanities majors and then also promote AI use in the classroom. Why do you think the same tech companies that create AI are trying to privatize public universities? It’s certainly not to support humanities programs. [Post]. X.

Figure 9. [@ClubChalamet]. (2024, November 18). This is oddly flattering, but no, I don’t use AI to write my captions. I hate AI to assist people in writing. It’s cheating, and its offensive to my student loans that paid for my MA in Communications. So no, the shit I write is the shit I write. [Post]. X.

McMurtrie, B. (2024). Cheating Has Become Normal. The Chronicle of Higher Education. https://www.chronicle.com/article/cheating-has-become-normal
